Monitoring and evaluation (M&E): A complete guide that actually works

June 4, 2025

Running a program without monitoring and evaluation is like driving blindfolded. You might think you’re headed in the right direction, but you’ll never really know until you crash into something. If you’re managing projects or trying to create real change, you need to track whether your efforts are working. That’s where monitoring and evaluation (M&E) comes in. It’s not some bureaucratic nightmare – it’s your best tool for understanding what’s working, what’s failing, and how to fix things.

Introduction to monitoring and evaluation (M&E)

Defining M&E

Monitoring and evaluation are like the pulse of any organization trying to make a difference. They give you the insights that separate successful programs from expensive mistakes.

Here’s the simple version: monitoring tracks your progress while you’re working. Evaluation takes a step back and asks whether you actually achieved what you set out to do.

Think about planning a road trip. Monitoring is like checking your GPS every few minutes to see if you’re still on the right route. Evaluation is sitting down at the end and figuring out what went right, what went wrong, and how you’d plan the trip differently next time.

Most organizations create an M&E plan – basically a document that explains how they’ll track results throughout their program. Smart organizations treat this as a living document that gets updated regularly, not something that sits in a drawer gathering dust.

Key objectives of M&E

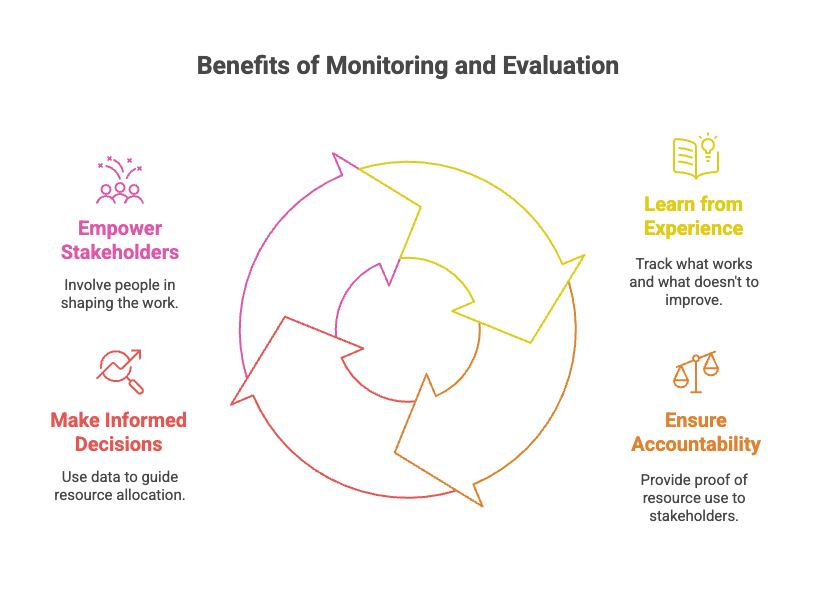

Why bother with all this tracking and measuring? Four main reasons.

First, you learn from experience and get better at what you do. When you actually track what works and what doesn’t, you can course-correct instead of repeating the same mistakes over and over.

Second, accountability matters. Your funders, the communities you serve, your board – they all want proof that resources aren’t being wasted. Fair enough.

Third, M&E helps you make smarter decisions. Data tells you where to invest your limited time and money for maximum impact. Without it, you’re just guessing.

Finally, good M&E actually empowers the people you’re trying to help by involving them in the process and showing how their feedback shapes your work.

Organizations utilizing M&E

Pretty much everyone uses some form of M&E these days. Government agencies track public programs to justify budgets. Nonprofits use it to show donors they’re making a real difference.

International development organizations are probably the biggest users. They have to prove results across complex, multi-year programs in challenging places. Even companies track their corporate social responsibility work this way.

The thing is, any organization trying to change something can benefit from M&E. The question isn’t whether you need it – it’s whether you’re doing it well.

Understanding monitoring

Defining monitoring

Monitoring is continuous assessment of programs based on real-time information about what’s actually happening. It’s systematic data collection that happens while your program is running, not after it’s over.

The goal is tracking progress against what you planned. If you said you’d train 100 teachers by month six and you’ve only trained 40, monitoring catches that gap early enough to do something about it.
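
The teacher-training example can be turned into a small check. This is a minimal sketch, not a standard method: it assumes progress should accrue evenly month by month (linear pacing), and the name `check_progress` and the 10% tolerance are illustrative choices, not something an M&E standard prescribes.

```python
from dataclasses import dataclass


@dataclass
class ProgressCheck:
    expected_to_date: float  # what the plan implies by now (linear pacing assumed)
    actual_to_date: int      # what has actually been delivered
    shortfall: float         # how far behind plan, never negative
    on_track: bool           # True if actual is within the tolerance of expected


def check_progress(target, deadline_month, current_month, actual, tolerance=0.10):
    """Compare actual delivery with a linearly paced plan (an assumption)."""
    if deadline_month <= 0:
        raise ValueError("deadline_month must be positive")
    # Share of the planning period that has elapsed, capped at 100%.
    elapsed_share = min(current_month / deadline_month, 1.0)
    expected = target * elapsed_share
    shortfall = max(expected - actual, 0.0)
    on_track = actual >= expected * (1 - tolerance)
    return ProgressCheck(expected, actual, shortfall, on_track)


# The example from the text: 100 teachers planned by month six, 40 trained.
month_six = check_progress(target=100, deadline_month=6, current_month=6, actual=40)
# The same count checked at month three, where the plan implies 50.
month_three = check_progress(target=100, deadline_month=6, current_month=3, actual=40)

print(month_six.shortfall, month_six.on_track)
print(month_three.shortfall, month_three.on_track)
```

Checked at month three, 40 teachers against an expected 50 already falls outside the tolerance. That is the kind of early warning monitoring is meant to give, well before the month-six deadline.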

Key components of monitoring

Monitoring usually focuses on four areas, and each one matters.

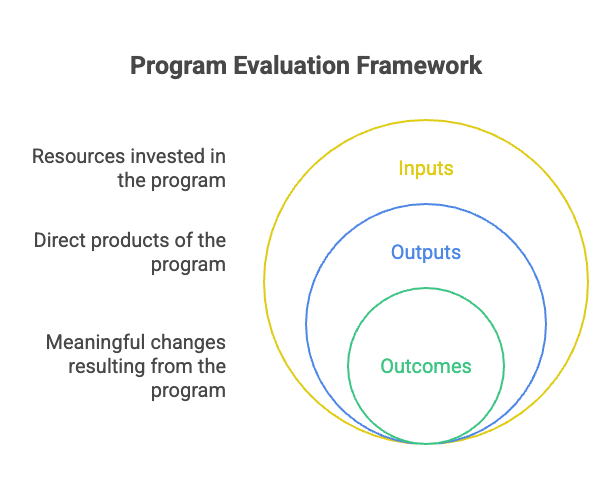

Input monitoring tracks your resources and activities. Are you actually using the staff, budget, and materials you planned for? Output monitoring looks at immediate results – how many people trained, services delivered, stuff produced.

Outcome monitoring gets more interesting. It examines whether your activities are creating the changes you wanted. Are those trained teachers actually using their new skills in classrooms?

Impact monitoring takes the longest view. Are communities actually better off because your program existed? This is the hardest to measure but often the most important.

Monitoring tools and techniques

The tools range from dead simple to pretty sophisticated. Performance indicators are your basics – things like number of people served, percentage of targets hit, cost per unit delivered.
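
Two of the basic indicators mentioned here, percentage of target hit and cost per unit delivered, are simple ratios. The sketch below shows one way to compute them. The function names and the budget figure are hypothetical and only there for illustration.

```python
def percent_of_target(actual, target):
    """Share of a planned target achieved so far, as a percentage."""
    if target <= 0:
        raise ValueError("target must be positive")
    return 100.0 * actual / target


def cost_per_unit(total_cost, units_delivered):
    """Average spend per unit delivered (per person trained, per service, etc.)."""
    if units_delivered <= 0:
        raise ValueError("units_delivered must be positive")
    return total_cost / units_delivered


# Hypothetical figures: 40 of 100 planned teachers trained, 12,000 spent so far.
achieved = percent_of_target(actual=40, target=100)
unit_cost = cost_per_unit(total_cost=12_000, units_delivered=40)

print(f"{achieved:.0f}% of target, {unit_cost:.2f} per teacher trained")
```

Raising an error on a zero target or zero units is a deliberate choice in this sketch: a silent division by zero or a misleading 0% is worse than a loud failure when the underlying data is incomplete.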

For collecting data, you’ve got surveys, interviews, observations, reviewing documents. These days, mobile data collection platforms make field work much easier than old-school paper methods.

Dashboards and reporting systems help you spot trends and visualize progress. Modern M&E uses technology to make data collection and analysis faster and more accurate.

Exploring evaluation

Defining evaluation

Evaluation is the systematic assessment of a project or program phase, most often once it’s complete. It judges relevance, effectiveness, efficiency, and sustainability by looking at whether you achieved your goals and why things turned out the way they did.

While monitoring happens continuously, evaluation is periodic and digs deeper. It asks the big questions: Did we succeed? What worked and why? What were the unintended consequences we didn’t see coming?

Types of evaluation

Different types serve different purposes. Formative evaluation happens during implementation to improve the program while it’s still running. It’s like getting feedback on a draft instead of waiting until the final version.

Summative evaluation comes after completion to judge overall success. Process evaluation focuses on how well you executed your plan. Impact evaluation tries to figure out whether your program actually caused the changes you observed.

Evaluation methodologies

You can evaluate using numbers (quantitative methods like statistical analysis) or stories (qualitative methods like case studies and focus groups). The smart approach uses both.

Numbers tell you what happened. Stories tell you why. Cross-checking findings from multiple methods, what researchers call triangulation, gives you a fuller picture than either approach could provide alone.

The relationship between monitoring and evaluation

Complementary nature of M&E

Monitoring and evaluation work as an integrated system. Every program’s M&E plan looks different, but they all need the same basic structure and key elements.

Think of monitoring as gathering ingredients and evaluation as cooking the meal. Without good monitoring data, evaluation has nothing to work with. Without evaluation, monitoring data just sits there without deeper analysis or insight.

Differences between monitoring and evaluation

The main differences come down to timing, frequency, and depth of analysis.

Monitoring happens continuously during implementation. Evaluation occurs at specific points or when programs end. Monitoring focuses on ongoing management and course correction. Evaluation steps back for strategic decisions about program value and future direction.

Monitoring asks “Are we on track?” Evaluation asks “Did we succeed and what can we learn?”

M&E in practice

Designing M&E systems

Good M&E starts with clear program logic. You need frameworks that map out how your activities will lead to desired outcomes. This becomes your foundation for picking indicators and data sources.

Your objectives should be specific, measurable, achievable, relevant, and time-bound (often abbreviated SMART). This helps you figure out the key performance indicators you’ll use to track progress.
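
One way to make those criteria concrete is to write each indicator down as a structured record. The sketch below is an assumption about how that might look, not a standard schema: the `Indicator` class, its field names, and the example date are all hypothetical. Only some criteria can be checked mechanically (a name, a unit, a target that differs from the baseline, a due date). Whether a target is achievable or relevant still takes human judgment.

```python
from dataclasses import dataclass
from datetime import date
from enum import Enum


class Level(Enum):
    """Where the indicator sits in the program logic."""
    INPUT = "input"
    OUTPUT = "output"
    OUTCOME = "outcome"
    IMPACT = "impact"


@dataclass(frozen=True)
class Indicator:
    name: str        # specific: what exactly is being counted
    level: Level
    unit: str        # measurable: the unit of measurement
    baseline: float  # value before the program starts
    target: float    # value the program commits to reaching
    due: date        # time-bound: a required field, so it cannot be omitted

    def problems(self):
        """Return the mechanical checks this indicator fails, if any."""
        issues = []
        if not self.name.strip():
            issues.append("name is empty (not specific)")
        if not self.unit.strip():
            issues.append("unit is missing (not measurable)")
        if self.target == self.baseline:
            issues.append("target equals baseline (no change expected)")
        return issues


# Hypothetical indicators; the due date is made up for the example.
good = Indicator("Teachers trained", Level.OUTPUT, "teachers", 0, 100, date(2025, 12, 31))
vague = Indicator("Better teaching", Level.OUTCOME, "", 0, 0, date(2025, 12, 31))

print(good.problems())
print(vague.problems())
```

A record like this also gives you a natural place to attach data sources and collection frequency later, which is most of what an indicator table in an M&E plan contains.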

Creating M&E plans and budgets upfront means you have resources and systems ready before implementation starts. Too many programs try to bolt on M&E as an afterthought.

Data management and analysis

Organizations everywhere face similar M&E challenges. Data gets stored in different systems used by different teams. Marketing, operations, and program staff all collect information, but without integration, it stays fragmented.

Effective data management needs quality checks, consistent collection methods, and integrated systems that prevent information silos. Analysis should go beyond basic reporting to find trends and insights that actually inform decisions.
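
As a rough illustration of what basic quality checks can look like, the sketch below flags missing required fields and duplicate record IDs in a batch of submitted records. The record layout, field names, and the `quality_issues` helper are assumptions made for this example. Real systems would add range checks, date validation, and more.

```python
def quality_issues(records, required, id_field="id"):
    """Return (row_index, message) pairs for missing fields and duplicate IDs."""
    issues = []
    seen_ids = set()
    for index, record in enumerate(records):
        # Flag required fields that are absent or left blank.
        for field in required:
            if record.get(field) in (None, ""):
                issues.append((index, f"missing {field}"))
        # Flag IDs that already appeared earlier in the batch.
        record_id = record.get(id_field)
        if record_id is not None:
            if record_id in seen_ids:
                issues.append((index, f"duplicate {id_field} {record_id}"))
            seen_ids.add(record_id)
    return issues


# Hypothetical field submissions from three training sites.
submissions = [
    {"id": 1, "site": "A", "trained": 12},
    {"id": 2, "site": "", "trained": 8},   # site left blank
    {"id": 1, "site": "B", "trained": 5},  # ID reused
]

found = quality_issues(submissions, required=["id", "site", "trained"])
for row, message in found:
    print(f"row {row}: {message}")
```

Running checks like these at the point of collection, rather than months later during analysis, is what keeps small entry errors from turning into questionable findings.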

Participatory M&E approaches

In 2025, organizations are putting more emphasis on stakeholder involvement throughout the M&E process. It’s not just good practice anymore – it’s strategic for making sure programs stay relevant and sustainable.

Getting beneficiaries and communities involved in M&E improves data quality and increases buy-in for findings. When people help measure success, they’re more invested in achieving it.

Challenges and best practices in M&E

Common challenges

The biggest mistake is starting M&E without clear objectives and indicators. Organizations constantly underestimate the time, budget, and people needed for effective M&E.

Data quality problems plague many systems. Poor training, weak supervision, and rushed timelines create questionable data that undermines decision-making.

There’s also tension between accountability and learning. Donors want proof of success. Program managers need honest feedback about what’s not working. Balancing these can be tricky.

Emerging trends and innovations

Stakeholder engagement is becoming central to M&E, not just an add-on. Organizations recognize that involving people throughout the process isn’t just nice to have – it’s essential for programs that actually work.

Technology is reshaping the field through mobile data collection, big data analytics, and real-time monitoring. AI and machine learning are starting to automate parts of the analysis and flag patterns people might miss.

Best practices for effective M&E

Engage stakeholders from day one. Include them when developing M&E frameworks. Make sure they understand why M&E matters and how it helps.

Build M&E capacity inside your organization instead of relying on outside consultants. Train your own people to design, implement, and use M&E systems.

Actually use your findings to improve programs. Too many M&E reports end up on shelves instead of informing real changes. If you’re not using the data to make decisions, why collect it?

M&E in global development context

International standards and guidelines (DAC Criteria)

The OECD’s Development Assistance Committee (DAC) defines six evaluation criteria that provide a framework for judging development interventions: relevance, coherence, effectiveness, efficiency, impact, and sustainability.

Here’s what each one asks:

- Relevance: Are we doing the right things?

- Coherence: How well does this fit with other efforts?

- Effectiveness: Are we achieving our objectives?

- Efficiency: Are we using resources well?

- Impact: What difference are we making?

- Sustainability: Will the benefits last?

The criteria were updated in 2019, when coherence was added as the sixth criterion, to better capture complexity, partnerships, and systems thinking. They’re widely used, though critics argue they’re too Western-focused.

M&E in sustainable development goals (SDGs)

The SDGs created massive demand for M&E data. Countries need robust monitoring systems to track progress on 17 goals and 169 targets. This sparked innovation in data collection and increased focus on standardized indicators.

The challenge is measuring complex development outcomes that don’t fit neatly into simple metrics. How do you track “reduced inequality” or “sustainable communities” in ways that work across different countries and contexts?

Conclusion – the future of M&E

M&E is evolving from a compliance exercise to a strategic tool for learning and improvement. The field is changing fast, especially as we move through 2025.

Key trends include more stakeholder engagement, real-time data collection, better technology integration, and focus on actually using findings. The field is moving toward more participatory, adaptive approaches that support continuous learning rather than just reporting.

For organizations serious about creating change, quality M&E isn’t optional anymore. The best systems become part of organizational culture, informing daily decisions and long-term strategy.

Start small but start smart. Focus on a few indicators that really matter. Build systems gradually. Always ask how M&E findings will improve your work.

The goal isn’t perfect measurement. It’s better understanding that leads to better results.

And honestly? That’s worth the effort.